Background

To overcome the limitations of double programming (listed in our previous post, How automation can improve cross table validation), statisticians perform a manual check for consistency across tables. While this improves quality, it has shortcomings.

The Challenge

Typically, the specifications for these consistency checks are not detailed, and are sometimes no more than ‘check for consistency’. As a result, not all possible checks are performed, and the checking varies between statisticians, and even for the same statistician at different times. Because the checking is done manually, there is scope for human error, particularly when there is a large amount of checking to do or the checks are complex.

A comprehensive consistency review is time-consuming and is therefore not necessarily performed on all deliverables, for example Safety Updates and Data Monitoring Committee outputs, or when existing tables have been changed or new tables added as a result of reviews.

These checks are, quite rightly, performed by a statistician and can take several hours. That time could instead be spent on tasks the statistician finds more satisfying and more challenging, improving their job satisfaction. From a company perspective, it would be financially beneficial if the checks could be performed automatically, with at least equal effectiveness, freeing these resources for more value-added tasks.

Solution

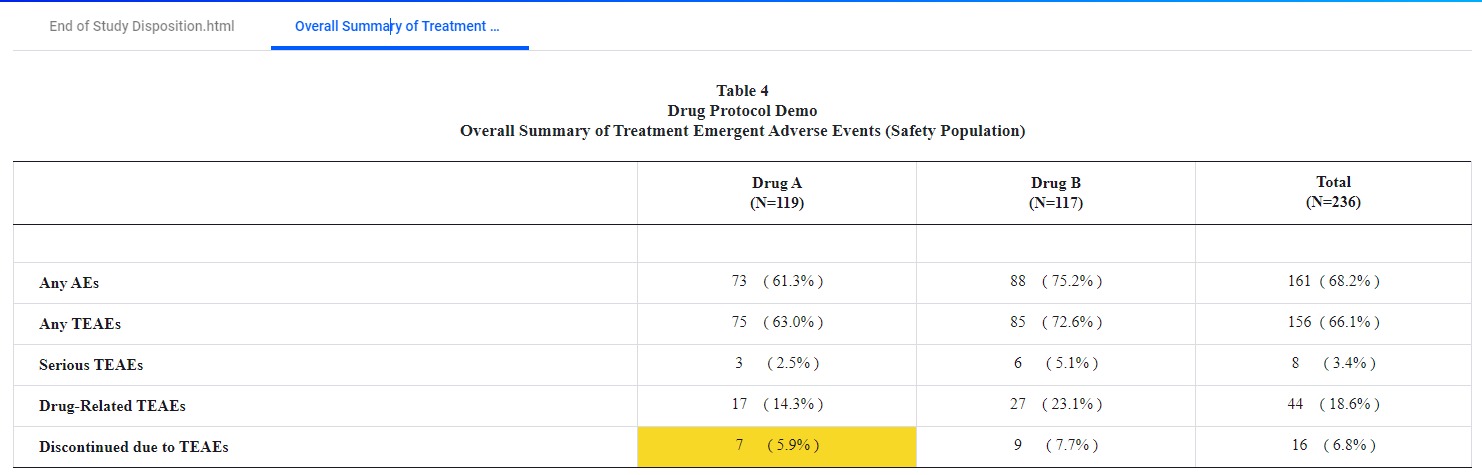

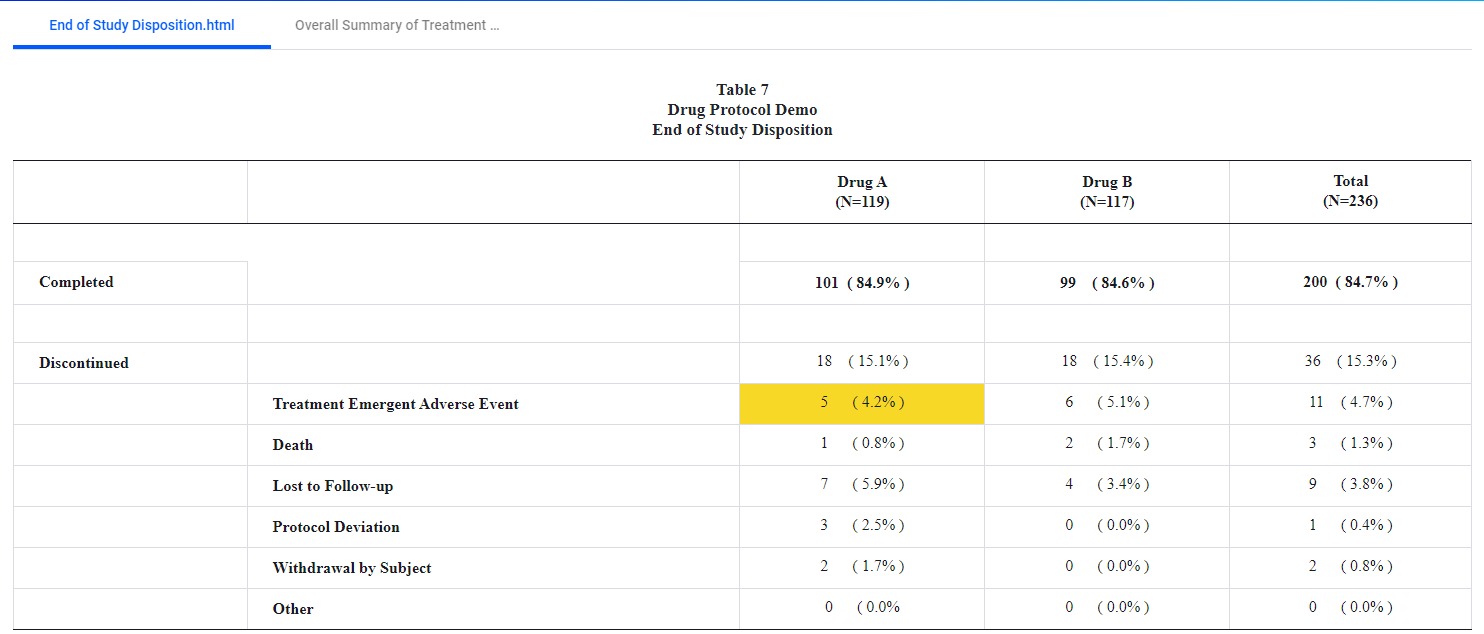

‘Verify’, a machine learning based tool, performs within table checks and can also perform cross table checks quickly and consistently for all deliverables (Tables 1 and 2).

Discrepancies are highlighted in the output so that the reviewer can immediately see where they are.

Table 1

Table 2

This would be achieved by running a set of standard cross table checks defined by statisticians. The algorithms would determine whether a given check is relevant for particular tables, so there would be no need for study-specific configuration. If a new check were required, it would be added to the standard set, making it available for all studies rather than just one.

This automated approach would still require a statistician to resolve the discrepancies raised by ‘Verify’, a task that often requires judgment and knowledge of the study. There would also still be a benefit to a statistician reviewing the main results of the study at a high level, for example checking whether the results in the tables are consistent with the p-values. However, automation can:

- Greatly reduce the time and effort needed to perform cross table checks

- Check the output comprehensively and consistently

- Improve job satisfaction

- Improve the productivity of statisticians
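To make the idea of a standard set of checks concrete, here is a minimal Python sketch of checks that decide for themselves whether they apply to a pair of tables. It is an illustration under stated assumptions, not how ‘Verify’ is implemented: the table representation (a mapping from row label to value), the names `SameValueCheck`, `Discrepancy` and `run_checks`, and the example figures are all hypothetical, and the relevance test here is a simple rule rather than a machine learning model.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Dict, List, Tuple

# Hypothetical representation: a table maps a row label to a reported value.
Table = Dict[str, float]


@dataclass(frozen=True)
class Discrepancy:
    check: str
    tables: Tuple[str, str]
    values: Tuple[float, float]


@dataclass(frozen=True)
class SameValueCheck:
    """A standard check: one row label must show the same value in every table."""
    name: str
    label: str

    def is_relevant(self, a: Table, b: Table) -> bool:
        # The check applies only when both tables report the item,
        # so no study-specific configuration is needed.
        return self.label in a and self.label in b

    def passes(self, a: Table, b: Table) -> bool:
        # Exact comparison; suitable for counts, not for rounded statistics.
        return a[self.label] == b[self.label]


def run_checks(tables: Dict[str, Table],
               checks: List[SameValueCheck]) -> List[Discrepancy]:
    """Run every relevant check on every pair of tables and collect discrepancies."""
    found = []
    for (name_a, a), (name_b, b) in combinations(tables.items(), 2):
        for check in checks:
            if check.is_relevant(a, b) and not check.passes(a, b):
                found.append(Discrepancy(check.name, (name_a, name_b),
                                         (a[check.label], b[check.label])))
    return found


# Made-up example data for illustration only.
example_tables = {
    "Demographics": {"N randomized": 120, "Mean age": 54.2},
    "Disposition": {"N randomized": 120, "Completed": 101},
    "Adverse events": {"N randomized": 118, "Subjects with any AE": 37},
}

# The standard set: adding a check here makes it available to every study.
standard_checks = [SameValueCheck("Randomized count agrees", "N randomized")]

discrepancies = run_checks(example_tables, standard_checks)
for d in discrepancies:
    print(f"{d.check}: {d.tables[0]} = {d.values[0]}, {d.tables[1]} = {d.values[1]}")
```

In this sketch the reviewer still decides what each reported discrepancy means; the code only finds and lists them, which mirrors the split between automated checking and statistician judgment described above.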